While Microsoft and others offer an assortment of AI services, anyone who has sat down and tried to build complex application flows with them will attest that it is a challenge. Building these flows takes time and a high degree of understanding of the services and their parameters. But what if there was a better way?

The way I like to think of Semantic Kernel is “use AI to do AI work”. Much like how we can leverage AI to explore ideas or learn a new aspect of programming more efficiently, let us have AI figure out how to call AI. That is what Semantic Kernel purports to achieve, and it does.

Semantic Kernel is an open-source AI orchestration SDK from Microsoft, hosted on GitHub. The main documentation lives on Microsoft Learn. The community is highly active and even has its own Discord channel.

Creating a Simple Example

The link above features a great example of wiring up Azure OpenAI service in a GPT style interface to have a history-based chat with a deployed OpenAI model.

On the surface, this looks like nothing more than a fancier way of calling OpenAI services and, at the most basic level, that is what it is. But the power of Semantic Kernel goes much deeper when we start talking about other features: Plugins and Planners.

Let’s Add a Plugin

Plugins are extra pieces of code that we can create to handle processing a result from a service call (or create input to a service call). For our case, I am going to create a plugin called MathPlugin using the code-based approach:
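The original listing is not reproduced here, so what follows is only a minimal sketch of what such a code-based plugin might look like. It assumes the Microsoft.SemanticKernel NuGet package; the description strings and the parameter name `number` are illustrative choices, not the author's exact code.

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

public class MathPlugin
{
    // [KernelFunction] exposes the method to the kernel; the Description
    // attributes are plain-language hints (wording here is illustrative).
    [KernelFunction, Description("Calculates the factorial of a non-negative whole number")]
    public int Factorial(
        [Description("The number to compute the factorial of")] int number)
    {
        // Iterative factorial; note that int overflows for inputs above 12.
        int result = 1;
        for (int i = 2; i <= number; i++)
        {
            result *= i;
        }
        return result;
    }
}
```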

This is all standard C# code with the exception of the attributes used on the methods and the arguments. While not relevant right now, these play a vital role when we start talking about planners.

Our function here will calculate the factorial of a given number and return it. To call this block, we need to load it into our kernel. Here is a quick code snippet of doing this:
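A sketch of how the loading and invocation could look, assuming a `MathPlugin` class with a `Factorial` function (taking a parameter named `number`) is defined in the same project, and a console project with implicit usings enabled. By default the plugin is registered under its type name.

```csharp
using Microsoft.SemanticKernel;

// Build a kernel and register the plugin by type.
var builder = Kernel.CreateBuilder();
builder.Plugins.AddFromType<MathPlugin>();
Kernel kernel = builder.Build();

// Invoke the function by plugin name and function name,
// passing the argument by its parameter name (assumed to be "number").
FunctionResult result = await kernel.InvokeAsync(
    "MathPlugin",
    "Factorial",
    new KernelArguments { ["number"] = 5 });

Console.WriteLine(result.GetValue<int>());
```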

Here we create our Kernel and use the AddFromType method to load our Plugin. We can then use the InvokeAsync method to call our code. In my case, I passed the number 5 and received 120, correctly, as the response.

What is the use case?

Our main goal here is to support writing code that may be more accurate at something, such as calculation, than an LLM would be. (Factorial works fine in most LLMs; I used it only as an example.)

Cool example, but it seems weird

So, if you are like me, the first time you saw this you thought, “so, that's cool, but what is the point? How is this preferable to writing the function straight up?”. Well, this is where Planners come into play.

Description is everything

I won't lie, Planners are really neat, almost like witchcraft. Note that in our MathPlugin example above, I used the Description attribute. I did NOT do this just for documentation; using this attribute (and the name of the function), Planners can figure out what a method does and decide when to call it. Seems crazy, right? Let's expand the example.

First, we need a deployed LLM to make this work. I am going to use GPT-4o deployed through Azure OpenAI (see the Azure OpenAI deployment instructions).

Once you have this deployment and the relevant information (deployment name, endpoint, and API key), you can use the extension method AddAzureOpenAIChatCompletion to add this functionality to your kernel:
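A sketch of that registration, under a few assumptions: the environment variable names are hypothetical placeholders for wherever you keep your settings, and a `MathPlugin` class is assumed to exist in the project.

```csharp
using System;
using Microsoft.SemanticKernel;

// Hypothetical environment variable names; substitute your own configuration source.
string deploymentName = Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT")
    ?? throw new InvalidOperationException("Deployment name is not set.");
string endpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT")
    ?? throw new InvalidOperationException("Endpoint is not set.");
string apiKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY")
    ?? throw new InvalidOperationException("API key is not set.");

var builder = Kernel.CreateBuilder();

// Register the Azure OpenAI chat completion service with the kernel.
builder.AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);

// Plugins are registered on the same builder, as before.
builder.Plugins.AddFromType<MathPlugin>();

Kernel kernel = builder.Build();
```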

This is one of the main strengths of Semantic Kernel: it features a pluggable model which can support ANY LLM. Here is the current list, and I am told support for Google Gemini is forthcoming.

Let the machine plan for itself

Let’s expand our MathPlugin with a new action called Subtract10 that, you guessed it, subtracts 10 from whatever number is passed.
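As a rough sketch, the expanded plugin might look like this. The method names Subtract10 and Add come from the article; the parameter names and description strings are assumptions made for illustration.

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

public class MathPlugin
{
    [KernelFunction, Description("Calculates the factorial of a non-negative whole number")]
    public int Factorial(
        [Description("The number to compute the factorial of")] int number)
    {
        int result = 1;
        for (int i = 2; i <= number; i++)
        {
            result *= i;
        }
        return result;
    }

    [KernelFunction, Description("Subtracts 10 from the given number")]
    public int Subtract10(
        [Description("The number to subtract 10 from")] int number)
        => number - 10;

    [KernelFunction, Description("Adds two numbers together")]
    public int Add(
        [Description("The first number to add")] int number1,
        [Description("The second number to add")] int number2)
        => number1 + number2;
}
```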

Here we have added two new methods: Subtract10 (as mentioned) and Add which adds two numbers together. Ok, cool we have our methods. Now, we are going to create a Planner and have the AI figure out what to call to achieve a stated goal.

Semantic Kernel comes with the HandlebarsPlanner in a prerelease NuGet package:

dotnet add package Microsoft.SemanticKernel.Planners.Handlebars --version 1.14.1-preview

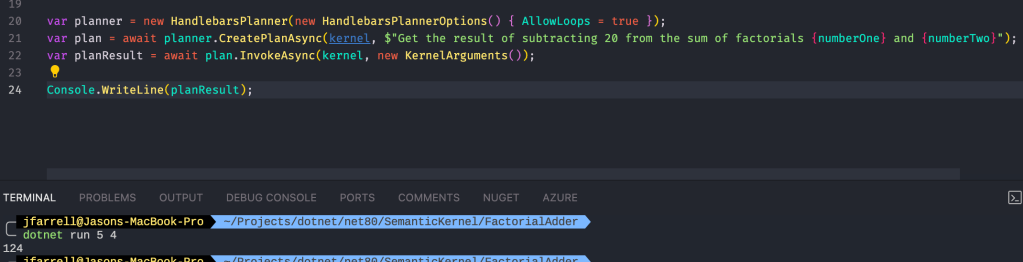

Once you have this, we can use this code to call it:
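A sketch of creating a plan without running it, assuming `kernel` already has a chat completion service and the MathPlugin registered. The `PlannerDemo` class and method name are hypothetical, and the `SKEXP0060` diagnostic ID is my assumption for the warning the experimental planner package raises; adjust it to whatever your compiler reports.

```csharp
#pragma warning disable SKEXP0060 // assumption: diagnostic ID for the experimental planner APIs

using System;
using System.Threading.Tasks;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Planning.Handlebars;

public static class PlannerDemo
{
    // Asks the LLM to produce a plan for the goal and prints it; nothing is executed yet.
    public static async Task<HandlebarsPlan> CreateAndPrintPlanAsync(Kernel kernel, string goal)
    {
        var planner = new HandlebarsPlanner();
        HandlebarsPlan plan = await planner.CreatePlanAsync(kernel, goal);

        // The plan prints as a Handlebars template listing the steps.
        Console.WriteLine(plan);
        return plan;
    }
}
```

You would call it with a plain-language goal, for example `await PlannerDemo.CreateAndPrintPlanAsync(kernel, "Take the factorial of 5, add 24, then subtract 10")`. That goal string is a made-up illustration, not the one used in the article.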

The call to CreatePlanAsync creates a set of steps for the Planner to follow; it passes your goal, along with the descriptions of the registered plugin functions, to your registered LLM to deduce what is being requested. For debugging (or reporting) you can print these steps out by outputting the plan variable. Here is what mine looks like:

Reading through this is pretty insane. You can clearly see the AI figuring out what is being asked and THEN figuring out which plugin method to call. This is what is meant by AI automatically calling AI. But how?

Recall the Description attributes in MathPlugin on both the methods and the parameters. The AI reads these and uses that information to decide what to call. And this goes even further than you might think. Watch this.

Change the goal to indicate that you want to subtract 20. Again, no code changes. Here is the outputted plan.

If you look closely at Step 6 you can see what is happening. The Planner AI was smart enough to realize that it was asked to subtract 20 BUT only had a plugin that can subtract 10 so… it did it twice. That is INSANE!!

Anyway, if we modify the code to actually invoke the plan (we will change the request back to subtract 10) we can see below we get the correct response (134).
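Invoking the plan could be sketched as below. As before, `kernel` is assumed to have the LLM service and MathPlugin registered, the `PlanRunner` class and method name are hypothetical, and the `SKEXP0060` diagnostic ID is an assumption.

```csharp
#pragma warning disable SKEXP0060 // assumption: diagnostic ID for the experimental planner APIs

using System.Threading.Tasks;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Planning.Handlebars;

public static class PlanRunner
{
    // Creates a plan for the goal, then executes it against the kernel's plugins.
    public static async Task<string> RunGoalAsync(Kernel kernel, string goal)
    {
        var planner = new HandlebarsPlanner();
        HandlebarsPlan plan = await planner.CreatePlanAsync(kernel, goal);

        // InvokeAsync renders the plan, calling the plugin functions it references,
        // and returns the final output as a string.
        return await plan.InvokeAsync(kernel);
    }
}
```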

What if I want to use an AI Service with a Planner?

So far we have looked at creating a plugin and calling it directly. While interesting, it's not super useful, as we have other ways to do that. Then we looked at calling these plugins using a Planner. This was more useful, as we saw that we can use a connected LLM to let the Planner figure out how to call things. But this is not what Semantic Kernel is trying to solve. Let's take the next step.

To this point, our code has NOT directly leveraged the LLM. The Planner made use of it to figure out what to call, but our code never called into the LLM (in my case GPT-4o) directly. Let's change that and really leverage Semantic Kernel.

Prompt vs Functional Plugin

While these terms are not official Semantic Kernel vocabulary, plugins can do different things. To this point, we have written functional plugins; that is, plugins which are responsible for executing a segment of code. Now, we could use these plugins to call into the deployed OpenAI model, but this is where a prompt plugin comes into play.

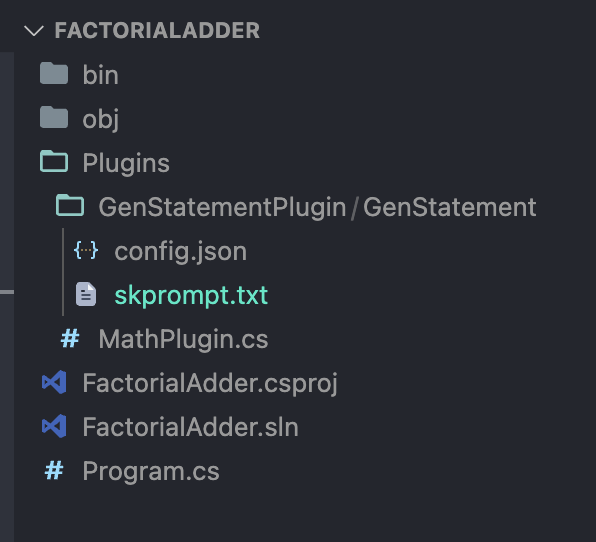

Prompt plugins are directory based, that is, they are loaded as a directory with two key files: config.json and skprompt.txt.

config.json contains the configuration used to call the connected LLM service. Here is an example of the one we will use in our next plugin.
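The original file is not reproduced here. As a rough sketch, a config.json for a prompt function that takes a single `number` input might look like the following; the description strings and the `max_tokens` value are illustrative assumptions, and the temperature is set near 1 to match the behavior described later.

```json
{
  "schema": 1,
  "description": "Generates a short statement that mentions a given number",
  "execution_settings": {
    "default": {
      "max_tokens": 200,
      "temperature": 0.9
    }
  },
  "input_variables": [
    {
      "name": "number",
      "description": "The number to include in the generated statement"
    }
  ]
}
```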

This plugin is going to generate a random statement from the LLM (OpenAI GPT in this case) that uses the number generated by the MathPlugin functions. Again, the descriptions of these values are CRUCIAL, as they allow the LLM to deduce what a function does and thus how to use it.

The skprompt.txt file contains the prompt that will be sent to the LLM to get the output. This is why this is referred to as a Prompt Plugin: you can think of it as encapsulating a prompt. Here is the prompt we will use:
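The author's exact prompt is not shown, so this is only a hypothetical stand-in to illustrate the shape of the file. The one element taken from the article is the `{{$number}}` placeholder, which the kernel fills in from the `number` input variable.

```text
Write one short, lighthearted sentence that mentions the number {{$number}}.
```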

Notice the use of the {{$number}} which is defined in the config.json above. This prompt will be passed to OpenAI GPT to generate our result.

Both of these files MUST be stored under a directory that bears the name of the action, which is under a directory bearing the name of the plugin. Here is my structure:
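A layout matching that description might look like this. The top-level `Plugins` folder name is an assumption; the other names follow the article.

```text
Plugins/
  GenStatementPlugin/
    GenStatement/
      config.json
      skprompt.txt
```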

In case you were wondering, the name of the action here is GenStatement, and it sits under the GenStatementPlugin folder, which gives the plugin its name.

Last, we need to add this plugin to the kernel so it can be used:
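One way to do this is sketched below, assuming the plugin folder is copied to the build output under a hypothetical `Plugins` directory. The `PromptPluginSetup` class and method name are made up for the example.

```csharp
using System;
using System.IO;
using Microsoft.SemanticKernel;

public static class PromptPluginSetup
{
    // Loads every function folder (e.g. GenStatement) under the plugin directory.
    public static KernelPlugin AddGenStatementPlugin(Kernel kernel)
    {
        // Assumed location: <output dir>/Plugins/GenStatementPlugin
        string pluginDirectory = Path.Combine(
            AppContext.BaseDirectory, "Plugins", "GenStatementPlugin");

        // The plugin name defaults to the directory name (GenStatementPlugin).
        return kernel.ImportPluginFromPromptDirectory(pluginDirectory);
    }
}
```

Because the prompt function is registered like any other plugin function, a Planner can pick it up through its description in config.json.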

We are now ready to see our full example in action. Here is the output from my script with the updated goal:

As you can see, we are getting a (somewhat) different response each time. This is caused by having our temperature value in the config.json used to call OpenAI set to near 1, which makes the result more random.

Closing Thoughts

AI using AI to build code is crazy stuff, but it makes a ton of sense as we get into more sophisticated uses of the AI services that each platform offers. While I could construct a pipeline myself to handle this, it is pretty insane to be able to tell the code what I want and have it figure it out on its own. Using AI to make AI-related calls is a stroke of genius. This tool clearly has massive upside, and I cannot wait to dive deeper into it and ultimately use it to bring value to customers.
